Branch out: new control components for more resilient workflows

Data analysis rarely follows a single straight path. Depending on the conditions in your data - or the business rules you’re working with - you often need your workflows to adapt on the fly.

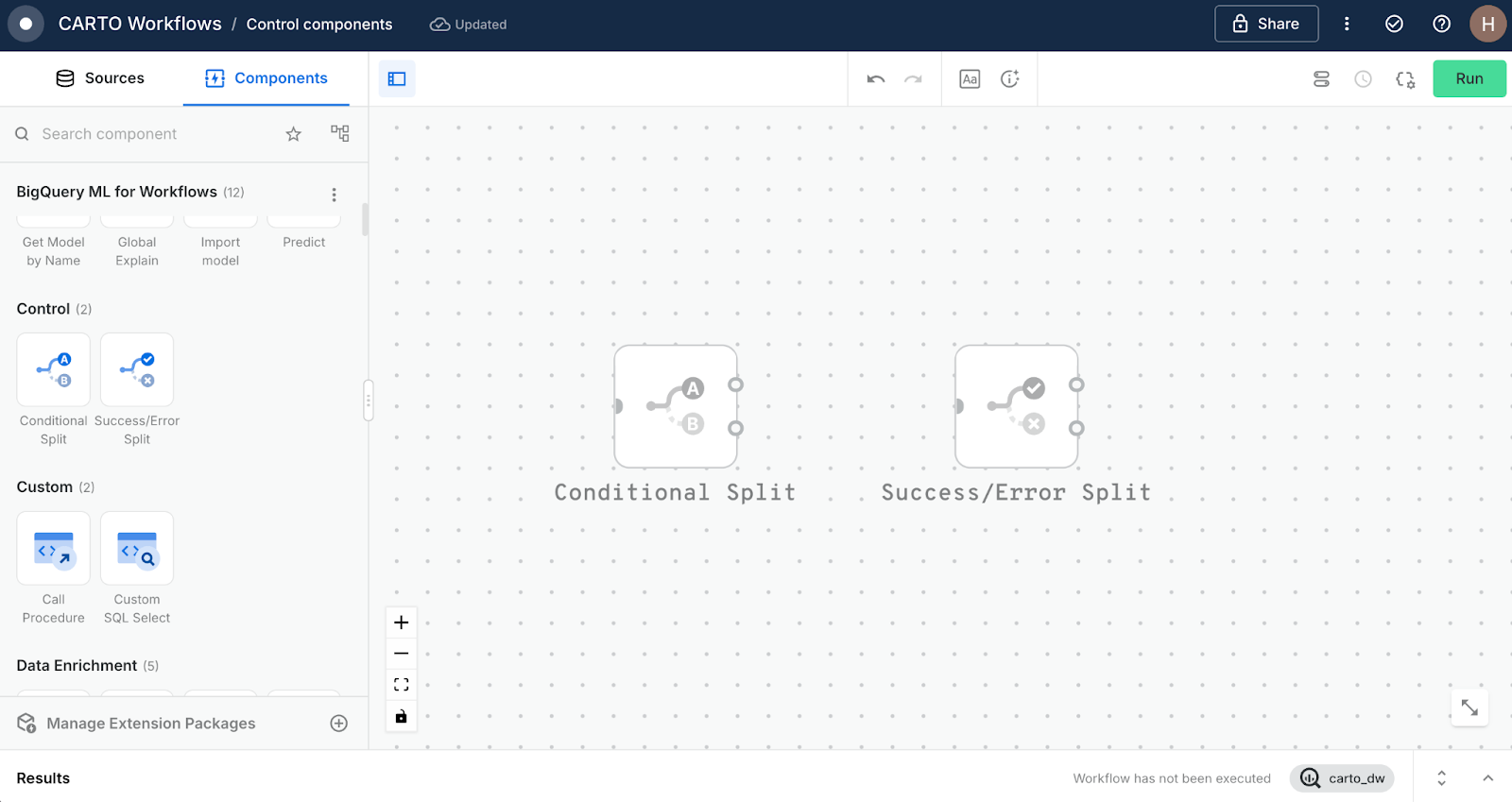

That’s why we’ve added two new control components to CARTO Workflows, our low-code tool for automating spatial analysis pipelines, right in your cloud data warehouse. The new Conditional Split and Success / Error Split components give you the flexibility to route data or actions dynamically, handle errors without manual intervention, and keep your processes clean and resilient.

Keep reading to learn more and see these in action!

These two new components let you introduce decision-making directly into your workflows:

- Conditional Split: Route your data down different paths depending on a rule you define, evaluating any aspect of the input data. For example, if the count of underserved households in a service area is greater than 500, run a fiber expansion workflow; otherwise, flag the area for wireless coverage.

- Success / Error Split: Branch your workflow based on whether the previous step succeeded or failed. For example, if a geocoding process fails, trigger a fallback geocoder; otherwise, continue with your risk assessment model.

Instead of building brittle one-size-fits-all flows that force you to manually rerun failed steps, you can now create adaptable, context-aware workflows. These can adapt instantly to what’s happening with your data, recover from errors without the need for manual fixes, and help keep your analysis tidy and maintainable.
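If you think in code, the routing behind these two components can be sketched in a few lines of Python. This is only an illustration of the branching pattern, not how CARTO Workflows implements it: the function names `conditional_split` and `success_error_split`, the field `underserved_households`, and the branch labels are all hypothetical.

```python
from typing import Any, Callable


def conditional_split(
    data: Any,
    condition: Callable[[Any], bool],
    if_true: Callable[[Any], Any],
    if_false: Callable[[Any], Any],
) -> Any:
    """Send the data down exactly one branch, depending on a rule."""
    return if_true(data) if condition(data) else if_false(data)


def success_error_split(
    step: Callable[[], Any],
    on_success: Callable[[Any], Any],
    on_error: Callable[[Exception], Any],
) -> Any:
    """Run a step, then branch on whether it succeeded or raised."""
    try:
        result = step()
    except Exception as exc:
        # Only failures of the step itself reach the error branch.
        return on_error(exc)
    return on_success(result)


# Hypothetical service area from the fiber vs. wireless example.
area = {"name": "Area 12", "underserved_households": 620}
route = conditional_split(
    area,
    condition=lambda a: a["underserved_households"] > 500,
    if_true=lambda a: "fiber_expansion",
    if_false=lambda a: "wireless_coverage",
)
```

Note that the success branch runs outside the `try` block, so an error raised further downstream is not mistaken for a failure of the step being checked.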

Let’s explore five ways you can put them to work!

Want to try this yourself? Sign up for a 14-day free trial here! You can also find examples of these new components in our Workflows templates! In the CARTO Workspace, simply head to Workflows > Create a new workflow > From template, and search “split.” Check out our full suite of Workflows templates here.

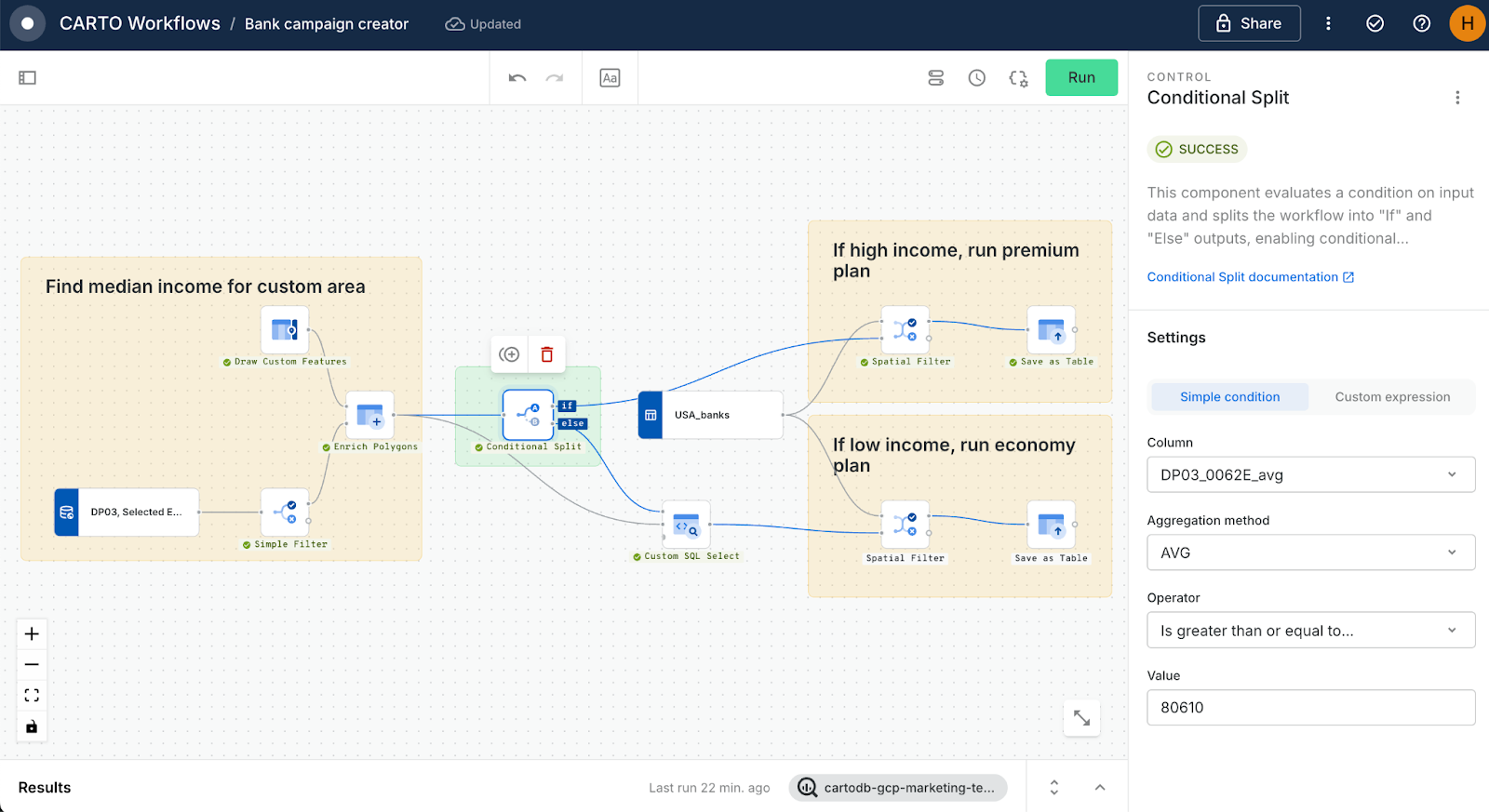

Branched analytics lets you design workflows that adapt their processing paths based on live thresholds in your data. Instead of locking into a single route, you can evaluate each dataset segment and decide where it should go - whether that’s applying a more advanced model, escalating to a specialist team, or pushing through an automated process. This approach makes pipelines more efficient, ensures the right resources are used for the right cases, and keeps operations responsive to changing conditions. Here’s a simple example:

This workflow calculates the median household income for a custom area. A Conditional Split component then checks whether that median income is higher or lower than the national average. You can see here that the components in the upper “higher income” branch have turned green, indicating that this is the “live” branch.

Based on the result, all banks within the area are identified, and the outcomes are written to a cloud table - ready to power downstream actions such as delivering targeted marketing, planning staff training, or adjusting resource allocation strategies.

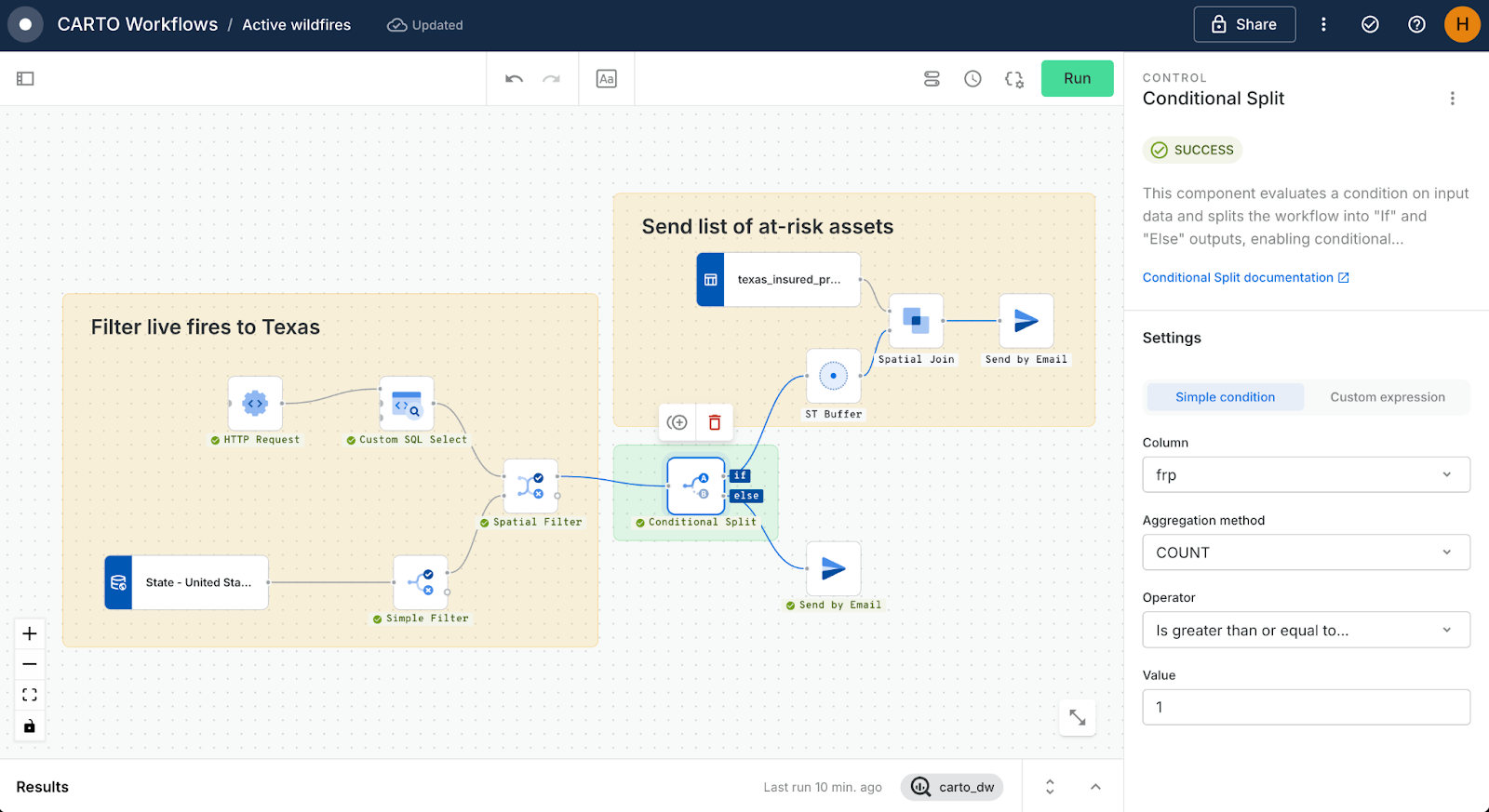

Keeping teams informed is critical, but the type of notification often depends on the situation. With control components, you can build workflows that automatically adapt how they communicate, delivering detailed information when action is needed - or providing reassurance when it’s not. This ensures notifications are always relevant, timely, and grounded in data.

This workflow monitors live fire data from NASA’s Fire Information for Resource Management System (FIRMS) API, filtered to the state of Texas. A Conditional Split component determines:

- Lower branch: If no wildfires are present, the workflow sends a simple email confirming that conditions are clear. You can see that this is the branch that was followed on the day this was run.

- Upper branch: If wildfires are detected, the workflow instead identifies insured assets within five miles of each fire, then sends a detailed notification listing those at-risk properties.

In both cases, the team receives an update - but the content is automatically tailored to the situation. This avoids unnecessary manual checks and ensures that when risk does arise, the alert includes the exact information needed for a quick response.
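To make the logic concrete, here is a rough Python sketch of the same decision. It assumes fires and assets are plain dictionaries with `lat`, `lon`, and (for assets) `id` keys, and it swaps the workflow's spatial components for a simple haversine distance check; `build_notification` and the message wording are hypothetical.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius


def haversine_miles(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two lat/lon points, in miles."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = (
        sin(dlat / 2) ** 2
        + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    )
    return 2 * EARTH_RADIUS_MILES * asin(sqrt(a))


def build_notification(fires: list, assets: list, radius_miles: float = 5.0) -> str:
    """Return the email body for whichever branch applies."""
    # Lower branch: no fires, so send a short all-clear message.
    if not fires:
        return "No active wildfires detected. Conditions are clear."

    # Upper branch: list every insured asset near at least one fire.
    at_risk = sorted(
        {
            asset["id"]
            for asset in assets
            for fire in fires
            if haversine_miles(asset["lat"], asset["lon"], fire["lat"], fire["lon"])
            <= radius_miles
        }
    )
    listing = ", ".join(at_risk) or "none"
    return (
        f"{len(fires)} active wildfire(s). "
        f"Insured assets within {radius_miles:g} miles: {listing}"
    )
```

Either way, a message goes out; only its content changes with the data.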

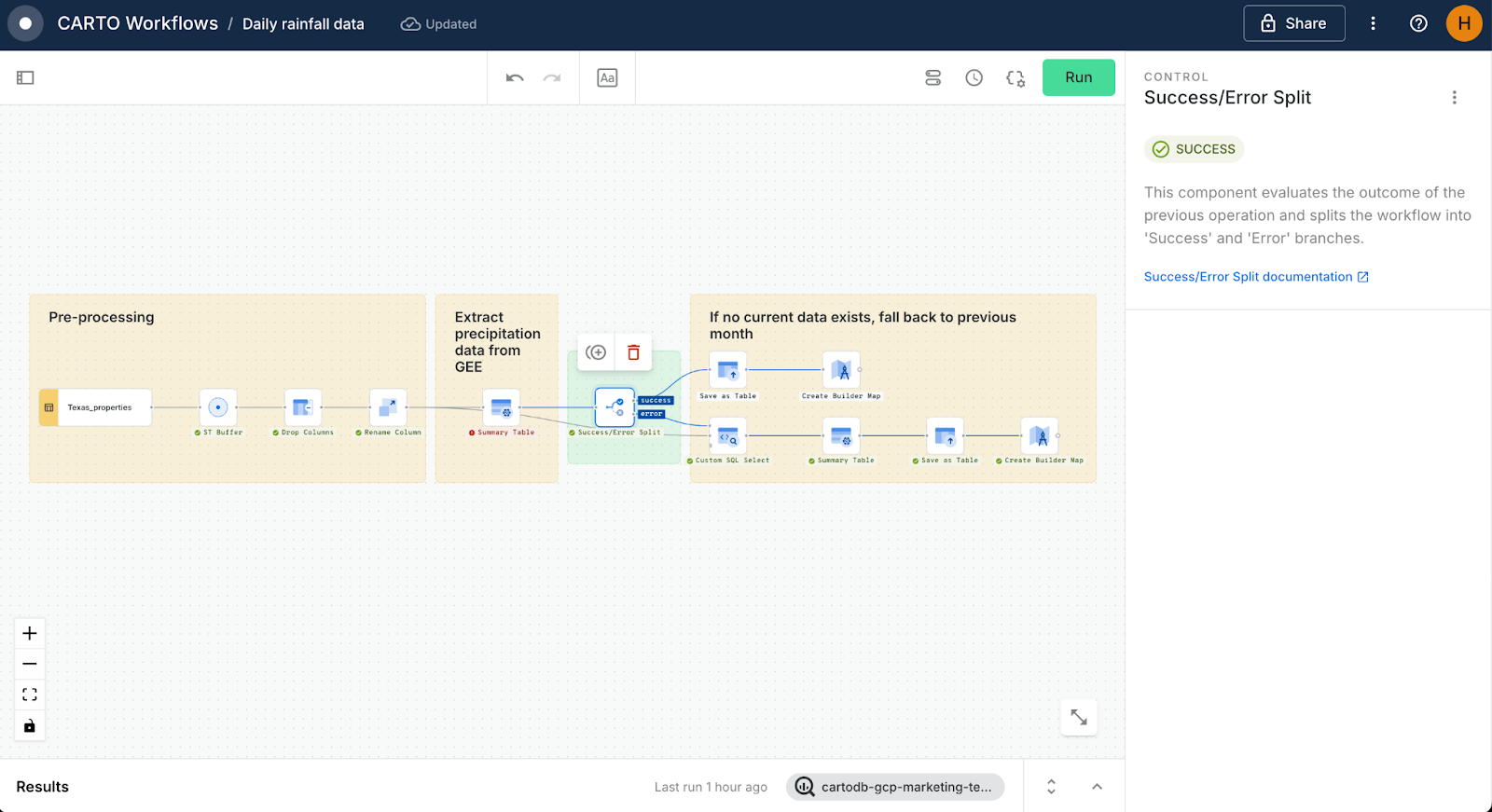

Automated fallback logic ensures your workflows keep running smoothly even when data or services fail. By defining alternate paths or backup data sources, you can prevent interruptions and maintain reliable outputs, without having to manually patch or restart processes. This makes workflows far more resilient and gives teams confidence that results are always complete and actionable.

This workflow monitors rainfall levels for 10,000 properties across Texas via the Google Earth Engine CARTO Workflows Extension Package. The initial Summary Table component attempts to find the average rainfall for the current date. If this process fails, a Success / Error Split component (highlighted in green) routes the analysis through a different Summary Table component, which calculates the average rainfall for the previous month instead. In both branches, the results are added to the CARTO Builder map below (open in full-screen here).
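In code terms, this fallback is close to a `try` / `except` around the primary step. The sketch below is a simplified stand-in, assuming each data source is a function that returns a list of rainfall readings; `average_rainfall_with_fallback` and both fetcher arguments are hypothetical names, not part of any CARTO or Earth Engine API.

```python
from statistics import mean
from typing import Callable, List, Tuple


def average_rainfall_with_fallback(
    fetch_current_date: Callable[[], List[float]],
    fetch_previous_month: Callable[[], List[float]],
) -> Tuple[str, float]:
    """Try today's rainfall first; fall back to last month's if that fails.

    Returns the name of the source that was used plus the average, so
    downstream steps can tell which branch produced the number.
    """
    try:
        # mean() raises on an empty list, so "no data" also counts as a failure.
        return "current_date", mean(fetch_current_date())
    except Exception:
        return "previous_month", mean(fetch_previous_month())
```

Returning the source label alongside the value is a design choice for this sketch: it keeps the output honest about which period the average actually covers.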

When the quality of your data determines the success of downstream processes, you can’t afford to let bad records slip through unnoticed. A data quality gate acts as a checkpoint in your workflow, evaluating datasets against a rule or threshold before deciding whether they should move forward - reducing errors, saving time, and preventing costly rework later.

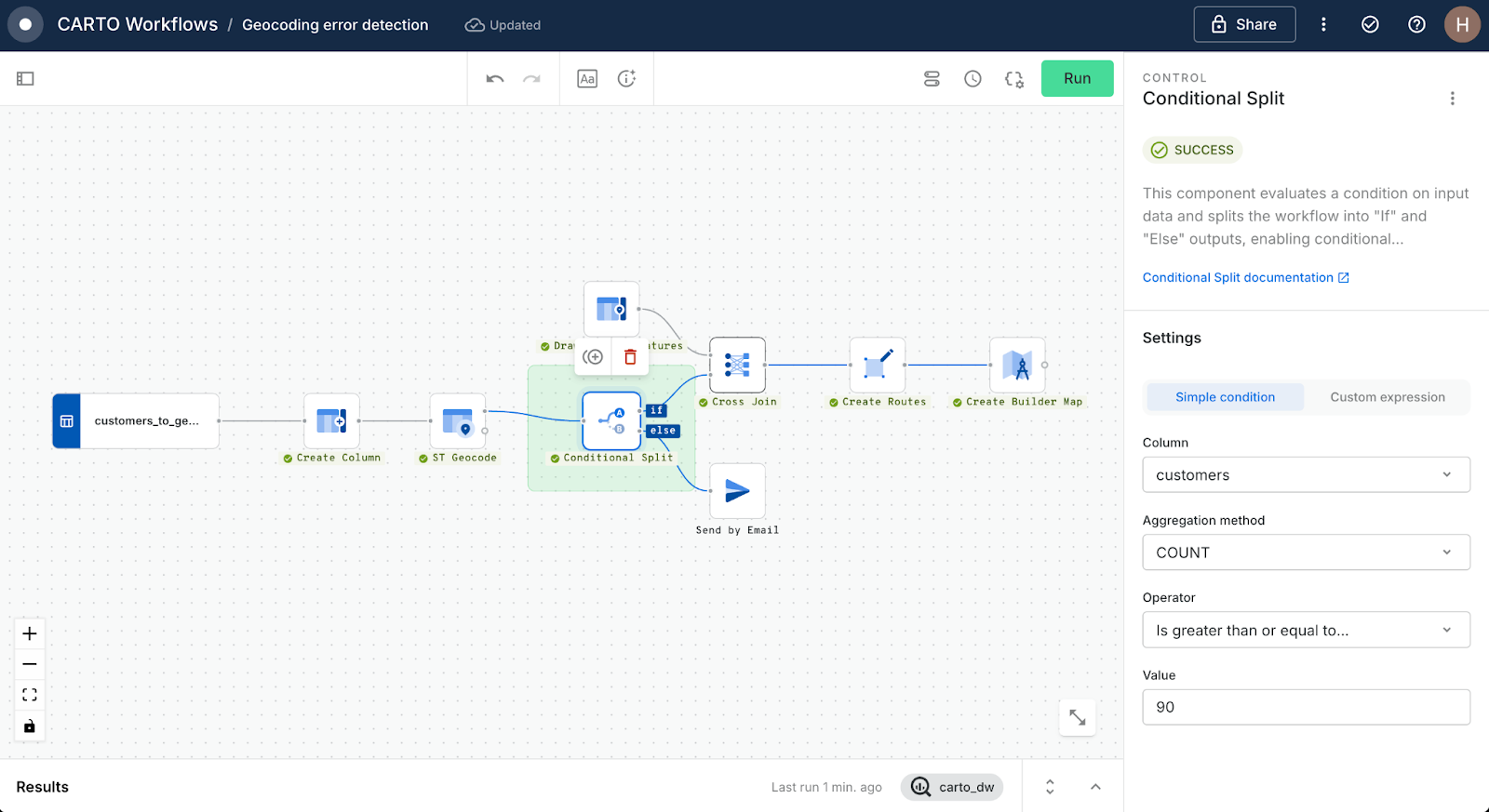

This example validates address accuracy for a logistics company. The addresses are geocoded first, and a Conditional Split then checks whether more than 10% of them failed to return valid coordinates. If the failure rate exceeds that threshold, the data is sent back to the provider for cleanup; otherwise, it’s routed straight into delivery planning and routing. This simple checkpoint prevents wasted driver time, misdeliveries, and downstream customer service issues.
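The gate itself boils down to one ratio and one comparison. Here is a minimal Python sketch, assuming each geocoded record is a dictionary whose `lat` and `lon` are `None` when geocoding failed; the function names and branch labels are hypothetical.

```python
from typing import Dict, List, Optional

Record = Dict[str, Optional[float]]


def failure_rate(geocoded: List[Record]) -> float:
    """Share of records that came back without valid coordinates."""
    if not geocoded:
        raise ValueError("cannot compute a failure rate for an empty batch")
    failed = sum(
        1 for rec in geocoded if rec.get("lat") is None or rec.get("lon") is None
    )
    return failed / len(geocoded)


def quality_gate(geocoded: List[Record], max_failure_rate: float = 0.10) -> str:
    """Pick the branch: strictly more than 10% failures goes back for cleanup."""
    if failure_rate(geocoded) > max_failure_rate:
        return "return_to_provider"
    return "delivery_planning"
```

With this rule, a batch where exactly 10% of addresses fail still passes the gate, matching the "more than 10%" wording above.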

When running spatial analysis, it’s common for tools and APIs to come with hard thresholds on how much data they can process at once. Instead of hitting errors or manually pre-processing your data, you can use a Conditional Split to dynamically adjust how the workflow behaves when those limits are exceeded.

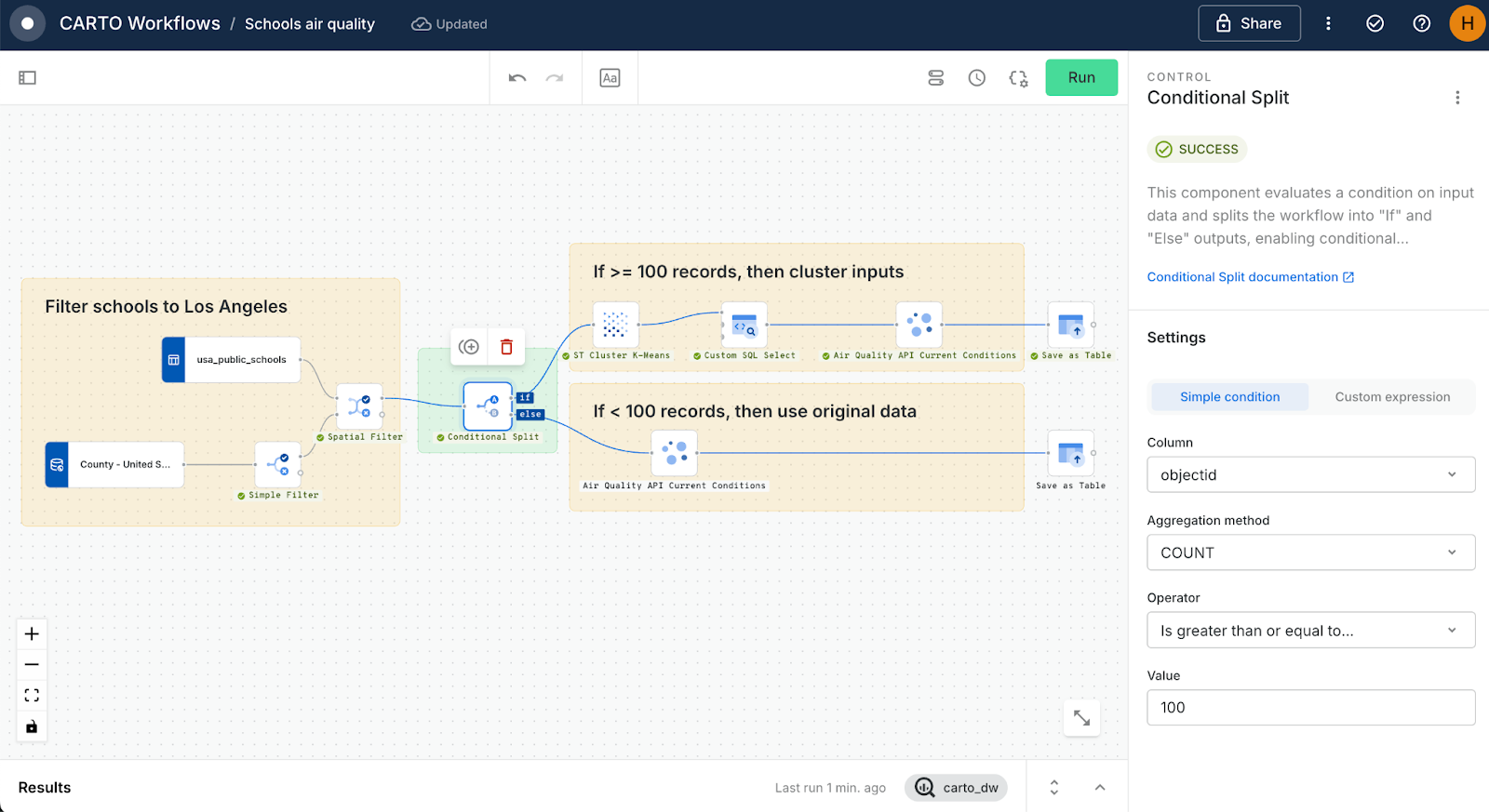

This workflow monitors real-time air quality for schools across Los Angeles using the Google Environment APIs CARTO Workflows Extension Package. To ensure this analysis does not exceed the Air Quality API Current Conditions quota of 1,000 calls per project, a Conditional Split is applied:

- Lower branch: If fewer than 100 schools are analyzed, the API is called directly for each school.

- Upper branch: If 100 or more are included, the schools are clustered, median centers are calculated, and the API is called only for those centers. You can see this is the condition met for this workflow run.

You can explore the results of this in the map below (open in full-screen here).

This keeps analysis scalable, prevents manual juggling of API quotas, and still supports decisions around health advisories and transport planning.
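As a rough sketch of that branching in Python: the example below assumes schools are `(lat, lon)` tuples and substitutes a simple grid bucketing for whatever clustering method the workflow uses, with each cluster's center approximated by the coordinate-wise median. The function name `points_to_query` and the `cell_deg` parameter are hypothetical.

```python
from collections import defaultdict
from math import floor
from statistics import median
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (lat, lon)


def points_to_query(
    schools: List[Point], max_direct: int = 100, cell_deg: float = 0.05
) -> List[Point]:
    """Decide which locations to send to the air quality API."""
    # Lower branch: few enough schools to call the API once per school.
    if len(schools) < max_direct:
        return list(schools)

    # Upper branch: bucket schools into grid cells (a stand-in for clustering)...
    cells: Dict[Tuple[int, int], List[Point]] = defaultdict(list)
    for lat, lon in schools:
        cells[(floor(lat / cell_deg), floor(lon / cell_deg))].append((lat, lon))

    # ...and query one median center per cell instead of every school.
    return [
        (median(p[0] for p in pts), median(p[1] for p in pts))
        for pts in cells.values()
    ]
```

The trade-off is resolution for call volume: nearby schools share one reading, so the cell size should reflect how quickly air quality varies across the area.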

Control components bring resilience, adaptability, and intelligence to spatial workflows. By branching logic based on data conditions, handling errors gracefully, enforcing quality gates, and working around tool limits, you can design pipelines that stay robust even under pressure.

Ready to try it yourself? Start your free 14-day trial today!
